Traditional computer interfaces typically use visual affordances to convey both the perceived and the actual properties of the interface. These affordances suggest the system's basic functions and also communicate how people might use it.

Such rich information visualization may, however, not suit the way we want pervasive computers and computational everyday environments to look and feel. We aim to create novel interactive experiences that exploit multisensory illusions to extend the range of interface properties that can be displayed, using only the surfaces of everyday objects as interfaces.

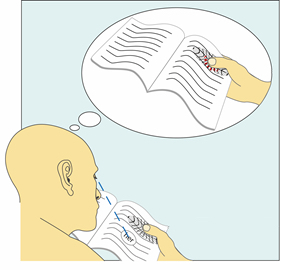

Similar to the “rubber hand illusion”, in which people can be induced to perceive a physical touch based purely on what they see, we will provide visual and haptic feedback induced by augmented vision and sound. Instead of changing the objects’ physical properties, we will augment them visually and auditorily using “smart glasses” and projectors, while also augmenting them haptically through multisensory illusions. Technically, this involves sensing user interaction with machine learning tools and presenting information multimodally.

Further information: project website

Project lead

Prof. Dr.-Ing. Katrin Wolf

Project partner

Ludwig-Maximilians-Universität München, Institut für Informatik, LFE Medieninformatik, Prof. Dr. Albrecht Schmidt

Funding

DFG SPP 2199: Scalable Interaction Paradigms for Pervasive Computing Environments

Duration

Since 2020

Contact