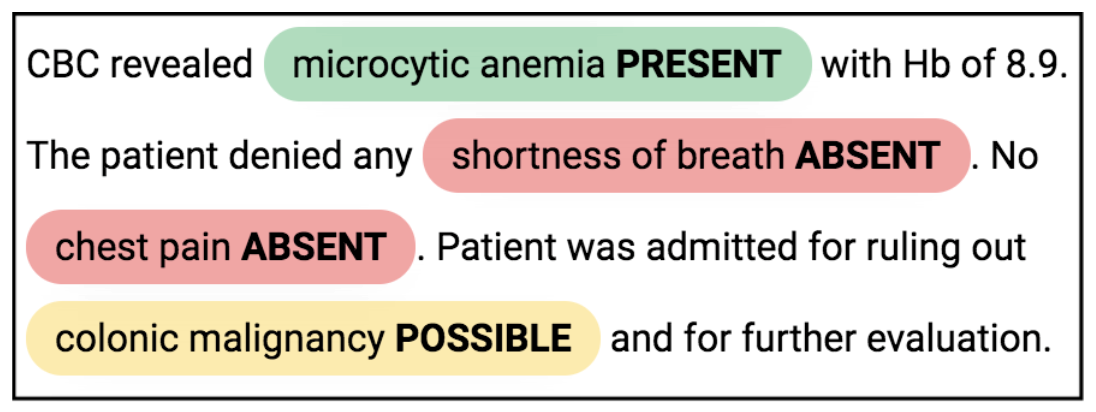

To provide high-quality care, health professionals must efficiently identify the presence, possibility, or absence of symptoms and treatments in free-text clinical reports. The task of assertion detection is to classify each entity (a symptom, treatment, etc.) as present, possible, or absent based on textual cues in unstructured text.
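To make the input and output of the task concrete, the following is a minimal sketch of a cue-based toy classifier. It is only an illustration of the three classes and is not the approach evaluated in the paper, which uses medical language models. The cue lists, the `Assertion` type, and the `classify` function are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass

# Hypothetical cue lists, for illustration only; they are not taken from the paper.
POSSIBLE_CUES = ("possible", "possibly", "suspected", "cannot rule out")
ABSENT_CUES = ("no ", "denies", "without", "negative for")


@dataclass
class Assertion:
    sentence: str  # the sentence from the clinical note
    entity: str    # the symptom or treatment mention inside that sentence


def classify(assertion: Assertion) -> str:
    """Return 'present', 'possible', or 'absent' using naive cue matching."""
    text = assertion.sentence.lower()
    position = text.find(assertion.entity.lower())
    # Only inspect the words that precede the entity mention.
    context = text[:position] if position >= 0 else text
    if any(cue in context for cue in POSSIBLE_CUES):
        return "possible"
    if any(cue in context for cue in ABSENT_CUES):
        return "absent"
    return "present"
```

For example, `classify(Assertion("The patient denies any shortness of breath.", "shortness of breath"))` yields `"absent"`. Such fixed cue lists break easily on real notes, which is part of the motivation for learned models.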

We evaluate state-of-the-art medical language models on this task and show that they outperform the baselines in all three classes. Since transferability is especially important in the medical domain, we study how the best-performing model behaves on unseen data from two other medical datasets from different fields. For this purpose, we introduce a newly annotated set of 5,000 assertions for the publicly available MIMIC-III dataset. We conclude with an error analysis that reveals data inconsistencies and situations in which the models still fail.

Publications

Betty van Aken, Ivana Trajanovska, Amy Siu, Manuel Mayrdorfer, Klemens Budde, Alexander Loeser. Assertion Detection in Clinical Notes: Medical Language Models to the Rescue? Proceedings of the Second Workshop on Natural Language Processing for Medical Conversations @ NAACL 2021

Paper: https://www.aclweb.org/anthology/2021.nlpmc-1.5/

Demo: https://ehr-assertion-detection.demo.datexis.com/

Dataset / Code: https://github.com/bvanaken/clinical-assertion-data

Model Checkpoint: https://huggingface.co/bvanaken/clinical-assertion-negation-bert
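The checkpoint can presumably be loaded with the Hugging Face `transformers` library. The sketch below assumes that the model expects the entity of interest to be wrapped in `[entity]` markers and that it returns one label per input; please verify both against the model card. The helper names `mark_entity` and `classify_assertion` are hypothetical and not part of the released code.

```python
def mark_entity(sentence: str, start: int, end: int, marker: str = "[entity]") -> str:
    """Wrap the character span [start, end) in marker tokens.

    The "[entity]" marker format is an assumption; check the model card.
    """
    return f"{sentence[:start]}{marker} {sentence[start:end]} {marker}{sentence[end:]}"


def classify_assertion(marked_sentence: str):
    """Run the published checkpoint on a sentence with a marked entity.

    Requires the `transformers` package and downloads the model on first use.
    """
    from transformers import pipeline  # imported lazily to keep the helper above dependency-free

    classifier = pipeline(
        "text-classification",
        model="bvanaken/clinical-assertion-negation-bert",
    )
    return classifier(marked_sentence)
```

A typical call would first build the marked sentence, for instance from `"The patient denies any shortness of breath."` with the span of "shortness of breath", and then pass the result to `classify_assertion`.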